I’ve been testing out newly released services in GCP which are designed to allow builders to create a DataMesh.

What is DataMesh?

In a nutshell, it’s an architecture where datasets are treated as ‘data domains’ or ‘data products’. What does this mean exactly? As usual, the devil is in the details. In my (client’s) world, it means a set of services that let you organize, secure and tag disparate data across multiple GCP projects.

In GCP you can include the following types of data: tables, datasets, models, data streams and filesets. These types of data live in a number of GCP systems. The supported systems are: BigQuery (datasets), Cloud Pub/Sub (messages), Cloud Storage (buckets), Data Catalog (metadata), Dataplex (a top-level aggregating/indexing system) and Dataproc Metastore (more metadata). My first step was to understand exactly what each of these types of data is. To get started I enabled the GCP Dataplex API and navigated to the main (Search) page (screen shown below).

I noticed that the ability to ‘star’ entries is highlighted on this main page.

Why use a DataMesh?

Most bioinformatics workloads use a Data Lake pattern due to the sheer scale and size of genomic files. Dataplex is designed to work with GCP Data Lakes. In fact, the ability to ‘Manage Lakes’ is integrated into the Dataplex main menu. This menu has three features: Manage | Secure | Process. The concept is that you have so much data to manage that you would like to perform these operations at the level of a group: a lake, or a subset of a lake (which is defined as a zone in a data lake).

In addition to the ability to assign permissions to associated data assets (buckets, datasets, etc.) grouped by lake or zone, Dataplex includes a higher-level set of constructs for data asset management. This is in the ‘Manage Catalog’ section of the Dataplex interface.

Lake Management

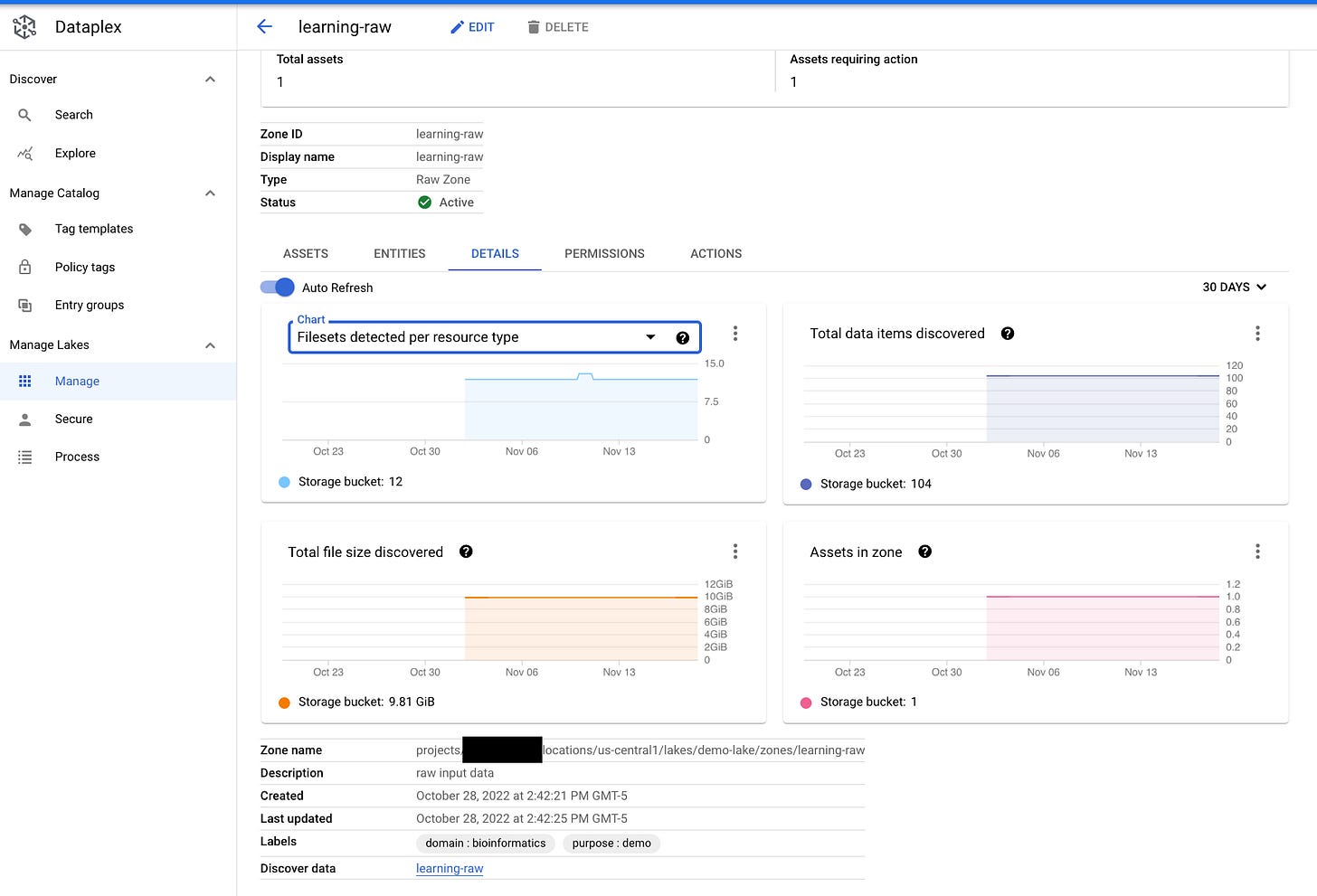

In the ‘Manage Catalog’ section you can create Tag Templates, Policy Tags or Entry Groups. Shown below is an example of a GCP Data Lake zone (named ‘learning-raw’) which includes all contents of one Cloud Storage bucket. Of course, you could put multiple data assets into this one zone.
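To make the lake, zone and asset grouping concrete, here is a minimal Python sketch of the hierarchy and the resource names it produces. It only models the structure locally and does not call any GCP API. The zone name ‘learning-raw’ comes from my example; the project, location, lake, asset and bucket names are hypothetical placeholders, and the bucket resource-name format is my understanding of how Dataplex refers to attached buckets.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Asset:
    asset_id: str
    resource_type: str  # e.g. "STORAGE_BUCKET" or "BIGQUERY_DATASET"
    resource_name: str  # the underlying bucket or dataset (format assumed)


@dataclass
class Zone:
    zone_id: str
    zone_type: str  # "RAW" or "CURATED"
    assets: List[Asset] = field(default_factory=list)


@dataclass
class Lake:
    project: str
    location: str
    lake_id: str
    zones: List[Zone] = field(default_factory=list)

    def name(self) -> str:
        return f"projects/{self.project}/locations/{self.location}/lakes/{self.lake_id}"


def asset_names(lake: Lake) -> List[str]:
    """Return the full resource name of every asset in every zone of the lake."""
    return [
        f"{lake.name()}/zones/{zone.zone_id}/assets/{asset.asset_id}"
        for zone in lake.zones
        for asset in zone.assets
    ]


# Hypothetical names, except the zone id 'learning-raw'.
lake = Lake(
    project="my-project",
    location="us-central1",
    lake_id="learning-lake",
    zones=[
        Zone(
            zone_id="learning-raw",
            zone_type="RAW",
            assets=[
                Asset(
                    asset_id="raw-genomes",
                    resource_type="STORAGE_BUCKET",
                    resource_name="projects/my-project/buckets/my-genomics-bucket",
                )
            ],
        )
    ],
)

for name in asset_names(lake):
    print(name)
```

Adding a second bucket or a BigQuery dataset to the zone is just another `Asset` entry in the same list, which is the point of grouping at the zone level.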

How to build a DataMesh in GCP?

After enabling the Dataplex API, I started building. I created some tags (templates and policy-based) and entry groups. Next I created a data lake and reviewed the default security. I can really see where managing security at the grouped data asset level (e.g. data lake/zone) rather than on each individual asset (e.g. storage bucket, BQ dataset, etc.) has the potential to improve operational efficiency.

Also of note is the ability to define process tasks for data assets in each Data Lake. Built-in processing tasks (many, but not all, use GCP Dataflow [managed Apache Beam]) include the following:
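The efficiency gain from group-level security is easier to see with a small model. The sketch below is plain Python, not a GCP client call: it assumes a role granted on a lake is inherited by every zone and asset underneath it, which is how I understand the grouping to work. The member, the resource names and the `grants` structure are all hypothetical; `roles/dataplex.dataReader` is used only as an example role.

```python
def ancestors(resource_name):
    """Yield the resource itself, then each enclosing parent, innermost first."""
    parts = resource_name.split("/")
    # Names alternate collection/id, so step back two segments at a time.
    for end in range(len(parts), 0, -2):
        yield "/".join(parts[:end])


def has_role(grants, member, role, resource_name):
    """True if the member holds the role on the resource or on any parent."""
    return any(
        (member, role) in grants.get(name, set())
        for name in ancestors(resource_name)
    )


LAKE = "projects/my-project/locations/us-central1/lakes/learning-lake"
ASSET = LAKE + "/zones/learning-raw/assets/raw-genomes"
OTHER = "projects/my-project/locations/us-central1/lakes/other-lake/zones/z1/assets/a1"

# One grant at the lake level instead of one per bucket or dataset.
grants = {LAKE: {("group:researchers@example.com", "roles/dataplex.dataReader")}}

print(has_role(grants, "group:researchers@example.com", "roles/dataplex.dataReader", ASSET))
print(has_role(grants, "group:researchers@example.com", "roles/dataplex.dataReader", OTHER))
```

A single entry in `grants` covers every asset in the lake, while assets in a different lake stay untouched.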

Convert to Curated Data Format

Tier from BQ to GCS

Check Data Quality

Ingest to Dataplex (uses GCP Data Fusion)

Custom Apache Spark task

Exploring the DataMesh

The results? A kind of ‘google-for-my-data’. Shown below is an example of using the filters to search for data assets that meet my filtering criteria. You can see I’ve selected the following combination of filters: “Everything, Fileset, Lake, Table and Public Datasets” + the search term “germline”.

It’s interesting to see that results include the following:

BigQuery public datasets

Data Lake assets (file buckets) in one of my GCP projects

BigQuery datasets in another one of my GCP projects
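The filter combination I selected in the UI can also be expressed as a search query string. Here is a rough Python sketch that builds one. It assumes the Data Catalog search syntax accepts `type=` and `system=` predicates combined with `OR`, and that public datasets are switched on through a scope option named `include_gcp_public_datasets`; both are from my reading of the docs, so verify them against the search reference before relying on this. The function name and the returned dictionary shape are my own invention and are not a client library call.

```python
def build_search_request(term, types=(), systems=(), include_public=False):
    """Build a query string plus scope options from UI-style filter choices."""
    clauses = [term] if term else []
    for key, values in (("type", types), ("system", systems)):
        if not values:
            continue
        alternatives = " OR ".join(f"{key}={value}" for value in values)
        # Parenthesize only when there is more than one alternative.
        clauses.append(f"({alternatives})" if len(values) > 1 else alternatives)
    return {
        "query": " ".join(clauses),
        # Assumed scope field name; public datasets are a scope, not a predicate.
        "scope": {"include_gcp_public_datasets": include_public},
    }


request = build_search_request(
    "germline", types=["fileset", "table"], include_public=True
)
print(request["query"])
```

Keeping the filters as separate arguments makes it easy to reuse the same search across projects, changing only the search term.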

The promise of being able to provide bioinformatics researchers with a searchable and gated data asset index is exciting! Enabling quicker access to data resources will let my customers get to work using that data to perform health informatics with less friction.

Learn More

This is just a first look. I’ve collected notes and links - here. I’ll be building more examples too. Share your tips/thoughts on building a cloud-based DataMesh too!